robots.txt

A default /robots.txt is output for every site on a WordPress environment. The output of /robots.txt can be modified with WordPress actions and filters.

In addition to an environment’s /robots.txt, an x-robots-tag: noindex, nofollow HTTP response header is returned for all requests made to:

- Production environments that do not yet have a custom domain assigned as the primary domain.

- Non-production environments at all times regardless of the domains assigned to them.
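To confirm whether an environment returns this header, its response headers can be inspected. The sketch below is a hypothetical helper (`my_has_noindex_header` is not part of WordPress or the platform) that scans raw header lines, such as those returned by PHP's `get_headers()`, for an `X-Robots-Tag` header containing `noindex`. The URL in the usage comment is a placeholder.

```php
<?php
/**
 * Return true when a list of raw response header lines contains an
 * X-Robots-Tag header that includes the "noindex" directive.
 * Hypothetical helper for illustration only.
 */
function my_has_noindex_header( array $header_lines ): bool {
    foreach ( $header_lines as $line ) {
        // Split "Name: value" once; lines without a colon
        // (e.g. the "HTTP/1.1 200 OK" status line) are skipped.
        $parts = explode( ':', $line, 2 );
        if ( count( $parts ) !== 2 ) {
            continue;
        }

        // Header names are case-insensitive.
        if ( strtolower( trim( $parts[0] ) ) !== 'x-robots-tag' ) {
            continue;
        }

        // Directives are comma-separated, e.g. "noindex, nofollow".
        $directives = array_map( 'trim', explode( ',', strtolower( $parts[1] ) ) );
        if ( in_array( 'noindex', $directives, true ) ) {
            return true;
        }
    }

    return false;
}

// Usage (placeholder URL; get_headers() returns false on failure):
// $lines = get_headers( 'https://example.go-vip.net/' );
// var_dump( is_array( $lines ) && my_has_noindex_header( $lines ) );
```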

Default output

Unlaunched WordPress production environments and non-production environments often do not have a primary domain assigned to them and are accessed by their convenience domain. Environments that are accessed by their convenience domain have default settings applied to their /robots.txt that are intended to prevent search engines from indexing content that should not be publicly accessible.

The default output of /robots.txt for environments with a convenience domain:

    # Crawling is blocked for go-vip.co and go-vip.net domains
    User-agent: *
    Disallow: /

Override default output

Custom modifications for /robots.txt should be tested on a non-production environment before applying them to a launched production environment.

To temporarily override the default /robots.txt output on an environment that is accessible by a convenience domain, add the following code to a file in /plugins or /client-mu-plugins:

    remove_filter( 'robots_txt', 'Automattic\VIP\Core\Privacy\vip_convenience_domain_robots_txt' );

Modify /robots.txt output

Prerequisite

The output of a site’s /robots.txt can only be modified if at least one of the following is true:

- The site’s environment (production or non-production) has a custom domain set as the primary domain.

- A temporary method is in place to override the default /robots.txt output set by the platform.

To modify /robots.txt for a site, hook into the do_robotstxt action or filter the output by hooking into the robots_txt filter.

In most cases, custom code to override the default output of /robots.txt can be added to a theme’s functions.php.

For WordPress multisite applications, custom code that defines more specific search engine crawling directives can be added as a plugin. The custom plugin can then be enabled on individual network sites.

Action

In this code example, the do_robotstxt action is used to disallow crawling of a specific directory for all User Agents:

    function my_robotstxt_disallow_directory() {
        echo 'User-agent: *' . PHP_EOL;
        echo 'Disallow: /path/to/your/directory/' . PHP_EOL;
    }
    add_action( 'do_robotstxt', 'my_robotstxt_disallow_directory' );

Filter

In this code example, the output of /robots.txt is modified using the robots_txt filter:

    function my_robots_txt_disallow_private_directory( $output, $public ) {
        $output .= 'Disallow: /wp-admin/' . PHP_EOL;
        $output .= 'Allow: /wp-admin/admin-ajax.php' . PHP_EOL;

        // Add custom rules here
        $output .= 'Disallow: /private-directory/' . PHP_EOL;
        $output .= 'Allow: /public-directory/' . PHP_EOL;

        return $output;
    }
    add_filter( 'robots_txt', 'my_robots_txt_disallow_private_directory', 10, 2 );

Disallow AI crawlers

Use the robots_txt filter to configure a site’s /robots.txt to disallow artificial intelligence (AI) crawlers from crawling a site.

Note

Additional restriction to a site’s content can be put in place for AI crawlers with the VIP_Request_Block utility class.

In this code example, a site’s /robots.txt is configured to disallow requests from User Agents of well-known AI crawlers (e.g. OpenAI’s GPTBot).

Only four AI crawlers are included in this code example, though many more exist. Customers should research which AI crawler User Agents to disallow for their site and include them in a modified version of this code example.

    function my_robots_txt_block_ai_crawlers( $output, $public ) {
        $output .= '
    ## OpenAI GPTBot crawler (https://platform.openai.com/docs/gptbot)
    User-agent: GPTBot
    Disallow: /

    ## OpenAI ChatGPT service (https://platform.openai.com/docs/plugins/bot)
    User-agent: ChatGPT-User
    Disallow: /

    ## Common Crawl crawler (https://commoncrawl.org/faq)
    User-agent: CCBot
    Disallow: /

    ## Google Bard / Gemini crawler (https://developers.google.com/search/docs/crawling-indexing/overview-google-crawlers)
    User-agent: Google-Extended
    Disallow: /
    ';

        return $output;
    }
    add_filter( 'robots_txt', 'my_robots_txt_block_ai_crawlers', 10, 2 );

Discourage search engines

If the content of a WordPress environment with an assigned primary domain should not be accessible for indexing by search engines, programmatically modify the output of /robots.txt with the desired settings for access.
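A minimal sketch of this approach, assuming the goal is to ask all crawlers to stay away from the entire site: the callback below replaces the filtered output with a single catch-all rule. The function name `my_robots_txt_disallow_all` is hypothetical, and the `function_exists()` guard is only there so the snippet can be loaded outside WordPress.

```php
<?php
/**
 * Replace the /robots.txt output with a rule that disallows all
 * crawling. Hypothetical example; adjust the rules as needed.
 *
 * @param string $output The /robots.txt output built so far.
 * @param mixed  $public Value of the site's "blog_public" option (unused here).
 * @return string The replacement /robots.txt output.
 */
function my_robots_txt_disallow_all( $output, $public ) {
    // Discard the existing output and return one catch-all group.
    return 'User-agent: *' . PHP_EOL . 'Disallow: /' . PHP_EOL;
}

// add_filter() only exists once WordPress has loaded.
if ( function_exists( 'add_filter' ) ) {
    add_filter( 'robots_txt', 'my_robots_txt_disallow_all', 10, 2 );
}
```

Because the callback returns a new string instead of appending to `$output`, any rules added by callbacks that ran earlier on the `robots_txt` filter are discarded.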

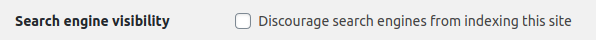

In addition, a setting in a site’s WordPress Admin dashboard can be enabled to discourage search engines.

- In the WP Admin, select Settings -> Reading from the left-hand navigation menu.

- Toggle the setting labeled “Search engine visibility” and enable the option “Discourage search engines from indexing this site”.

- Select the button labeled “Save Changes” to save the setting.

Purge cache for robots.txt

A site’s /robots.txt is cached for long periods of time by the page cache. After changes are made to /robots.txt, the cached version can be purged by using the VIP Dashboard or VIP-CLI.

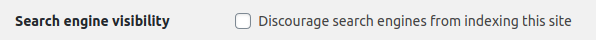

The cached version of /robots.txt can also be cleared from within the WordPress Admin dashboard.

- In the WP Admin, select Settings -> Reading from the left-hand navigation menu.

- Toggle the setting of Search engine visibility, and select the button labeled “Save Changes” each time the setting is changed.

Last updated: March 05, 2025